Setting up active-standby load balancing on an F5 appliance


Recently I bumped into an interesting problem: one of our clients could not use two proxy servers at once. Whenever both Linux-based proxy servers were booted up and working, connections didn't get through. The proxy servers were set up to take requests from the inside network and proxy them to a specific third-party network. By "connections didn't get through" I mean that connections were initiated towards the third party, out to the Internet, but no response was received.

There was no IP collision or other basic error; the usual process of adding a second Linux box to a network rules that out. What's more, it didn't matter which box was shut down: as soon as either one was, connections started working immediately. This confirmed that each box was fully functional on its own. Configuring, reaching and troubleshooting the boxes also worked without any problem, which rules out basic network connectivity errors.

The topology looked like this: the two proxies had internal (RFC 1918) IP addresses, and their connections were PATted to the Internet by a Cisco ASA. That was the outbound path. The inbound path was a bit more complicated: requests came from the internal network through an F5 appliance, which had a VIP (virtual IP) set up and load balanced the requests across the two proxy servers. The problem was on the outbound side. Because of the PAT, the third party saw both proxy servers coming from the same public IP address, and for them it was essential that the two proxies came from two different IP addresses. This is where it started to get complicated. The reason for the PAT was that on the ASA we only had a /29 block: eight IP addresses, of which six are usable, and all six were already in use. We could possibly free up one IP, but definitely not two, and expanding the /29 was totally out of the question.
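The /29 arithmetic is easy to check with Python's standard `ipaddress` module. The block below is a minimal sketch; 203.0.113.0/29 is a documentation range (RFC 5737) used purely as a stand-in, not the client's real allocation.

```python
import ipaddress

# Hypothetical public block: 203.0.113.0/29 is a documentation range (RFC 5737),
# used here only as a stand-in for the real allocation.
pool = ipaddress.ip_network("203.0.113.0/29")

total = pool.num_addresses   # every address in the /29, including network and broadcast
usable = list(pool.hosts())  # hosts() skips the network and broadcast addresses

print(f"{pool}: {total} addresses, {len(usable)} usable")
print(f"first usable: {usable[0]}, last usable: {usable[-1]}")
```

This prints 8 addresses with 6 usable (203.0.113.1 to 203.0.113.6), which is why there was no room to give each proxy its own public address.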

Another possible solution would have been to remove one of the proxy servers, since one is perfectly capable of handling the load on its own. However, this was a suboptimal path, as the second server was needed for resiliency. It seemed like checkmate.

After some brainstorming, we decided to take a different approach. By default, the F5 load balances requests using round robin, handing requests to the pool members in turn. There are of course other methods as well; round robin is just the default, and also the simplest to understand. What do all the available methods have in common? They are all active-active setups. We were after an active-standby setup, in which the primary server gets all the load while the standby gets nothing. When the primary fails, the standby starts getting all the requests until the primary comes back. This way only one proxy is actively passing traffic at any given time, but we keep the resiliency.
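To make the difference concrete, here is a toy Python model contrasting round robin with active-standby. It is only an illustration of the two distribution patterns, not how the F5 implements them; the member names `proxy-a` and `proxy-b` are hypothetical, and in the active-standby function the list order stands in for priority.

```python
from itertools import cycle

# Hypothetical names for the two proxy servers; list order doubles as priority.
members = ["proxy-a", "proxy-b"]


def round_robin(members, n_requests):
    """Active-active: each new request goes to the next member in turn."""
    rr = cycle(members)
    return [next(rr) for _ in range(n_requests)]


def active_standby(members, up, n_requests):
    """Active-standby: every request goes to the first member that is up."""
    for m in members:
        if up[m]:
            return [m] * n_requests
    return []  # no member is up, nothing can be served


print(round_robin(members, 4))
print(active_standby(members, {"proxy-a": True, "proxy-b": True}, 4))
print(active_standby(members, {"proxy-a": False, "proxy-b": True}, 4))
```

With round robin both proxies show up in the output, so the third party sees traffic from both. With active-standby only `proxy-a` is used until it is marked down, and then everything moves to `proxy-b`.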

So how do you set up active-standby on an F5 appliance? Meet the priority group. Each member of a pool is assigned to a priority group. Priority groups are numbered from zero, and each number represents a different priority group. The higher the number, the higher the priority. Multiple servers can be part of the same priority group. The point of priority groups is that traffic is only load balanced between the members of the highest-numbered priority group. If all servers in that group are down, the F5 sends requests to the members of the next-highest group, and so on. For an active-standby setup, you need two priority groups, each with one server in it. That's it. Requests go to the single server in the highest priority group, and when that server dies, the next group with the other server steps up.
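The selection rule can be modelled in a few lines of Python. This is a simplified sketch, not F5 code: the `Member` class, the `eligible_members` function and the addresses are hypothetical. The `min_active` parameter stands in for the "less than N available members" priority group activation setting. For N greater than 1 I assume lower groups are added cumulatively until the threshold is met, which is my simplified reading; check the F5 documentation for your version. With N = 1, the value used in this setup, the model reduces to "use only the highest group that still has a live member".

```python
from dataclasses import dataclass


@dataclass
class Member:
    address: str
    priority_group: int  # higher number = higher priority
    available: bool      # what the health monitor currently reports


def eligible_members(pool, min_active=1):
    """Return the members that may receive new requests (simplified model).

    Walks the priority groups from the highest number down, collecting the
    available members of each group, and stops once at least `min_active`
    members have been collected. If the threshold is never met, all available
    members are returned (possibly none).
    """
    groups = sorted({m.priority_group for m in pool}, reverse=True)
    selected = []
    for group in groups:
        selected += [m for m in pool if m.priority_group == group and m.available]
        if len(selected) >= min_active:
            break
    return selected


# Hypothetical RFC 1918 addresses for the two proxies.
pool = [
    Member("10.0.0.11", priority_group=2, available=True),  # primary
    Member("10.0.0.12", priority_group=1, available=True),  # standby
]
print([m.address for m in eligible_members(pool)])  # ['10.0.0.11']

pool[0].available = False  # the primary fails its health check
print([m.address for m in eligible_members(pool)])  # ['10.0.0.12']
```

Within the selected set, the pool's normal load balancing method still applies. Because each group holds a single server here, that method has nothing left to choose between.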

Here is how to configure this on BIG-IP 10.2.2. In the web UI, go to Local Traffic / Pools / Pool List and select the existing pool you want to modify. You will be presented with the Properties page; switch to the middle tab, 'Members'.

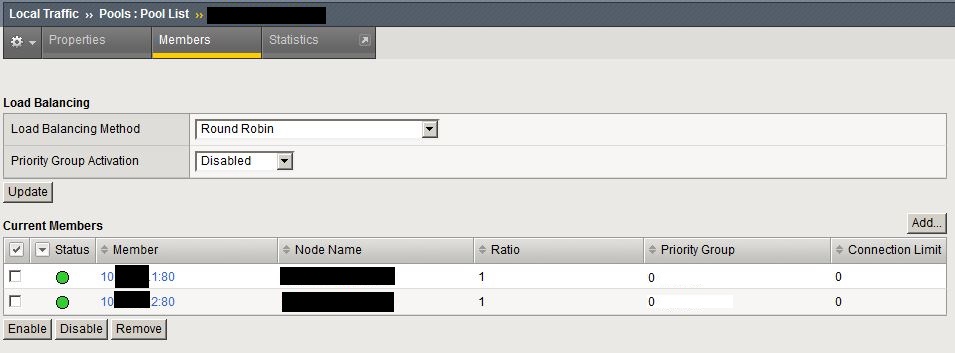

This is where the magic happens. If you just add the IP addresses and the basic settings to a pool, all members belong to the same priority group, group 0. Click on each member's IP address, and put the one you want as primary into priority group 2 and the other into priority group 1. Ratio can remain 1 in both cases; it doesn't play a role here.

Clicking Update takes you back to the previous page with the pool member configuration. Although the servers now belong to different priority groups, this has no effect yet, because priority group activation is still disabled. Just below the load balancing method at the top of the page, you can see that priority group activation is set to Disabled. Change it to "Less than" and enter 1 in the text field next to it, labelled available members.

This means that as long as at least one server is up in a given priority group, the lower-numbered groups are not used at all. Click Update and start watching the statistics on the third tab at the top.

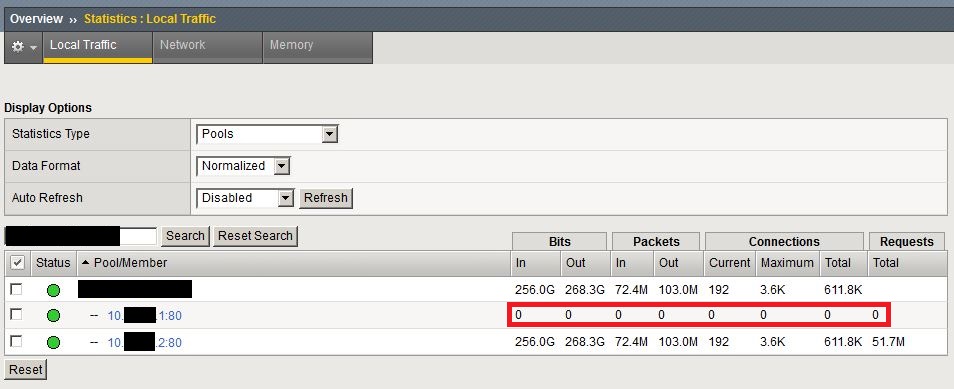

Keep in mind that traffic is not forced over immediately; the switch happens gradually. The F5 applies the change transparently: new connections are handled according to the new configuration, while existing connections stay on the server they were opened on, so that no connection is broken by the change. In other words, the configuration above only applies to new traffic. Keep an eye on the current connection count: on the standby server it will slowly converge to zero as existing connections finish and new ones are directed to the active node.
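The draining behaviour can be illustrated with a small Python simulation. Everything in it is hypothetical: the starting connection counts, the lifetimes measured in abstract "ticks", and the assumption that each new connection lasts 3 ticks. It only shows the pattern: old connections stay where they are until they finish, and new ones land on the active member.

```python
def simulate(connections, steps, new_per_step=1, lifetime=3, active="primary"):
    """Return the per-member connection count at the start of each step.

    `connections` maps a member name to a list of remaining lifetimes (in ticks)
    of its open connections. Existing connections stay on their member until
    they finish; only new connections are placed on the `active` member.
    """
    conns = {m: list(c) for m, c in connections.items()}  # don't mutate the caller's data
    history = []
    for _ in range(steps):
        history.append({m: len(c) for m, c in conns.items()})
        for member in list(conns):
            # A connection with 1 tick left finishes now; the others age by one tick.
            conns[member] = [t - 1 for t in conns[member] if t > 1]
        conns[active].extend([lifetime] * new_per_step)
    return history


# Hypothetical snapshot right after enabling priority groups: both proxies still
# carry connections that were opened under plain round robin.
snapshot = {"primary": [3, 1], "standby": [2, 4, 1]}
for step, counts in enumerate(simulate(snapshot, steps=5)):
    print(step, counts)
```

In this run the standby count goes 3, 2, 1, 1, 0 while the primary picks up all the new connections. That is the same convergence you should see in the pool statistics, only on a much longer timescale.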

When troubleshooting, make sure that the standby server's connection counter stays at zero at all times once the old connections have drained. That means the active server was able to handle the load throughout and never failed. If connections start to build up on the standby server, the active server has failed (or at least the F5 thinks it has) and the next available priority group has been activated.
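If you sample the current connection counts periodically, the check above is easy to automate. The sketch below is hypothetical: it assumes you already collect `(active_conns, standby_conns)` pairs somehow (how you read them from the F5 is outside its scope). It ignores the initial drain and reports the first sample where the standby picks up connections again after having reached zero.

```python
def first_standby_activity(samples):
    """Return the index of the first sample where the standby has connections
    after having drained to zero, or None if that never happens.

    `samples` is a list of (active_conns, standby_conns) tuples taken in time
    order. Non-zero standby counts before the first zero are treated as the
    initial drain of old connections, not as a failover.
    """
    drained = False
    for i, (_active, standby) in enumerate(samples):
        if standby == 0:
            drained = True
        elif drained:
            return i  # the standby is taking new traffic: the primary likely failed
    return None


# Hypothetical samples: draining, steady state, then the primary drops out.
samples = [(40, 5), (42, 2), (45, 0), (44, 0), (0, 12)]
print(first_standby_activity(samples))  # 4
```

A `None` result means either the standby never took traffic or it has not finished draining yet, so look at the last few samples before concluding all is well.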

Don't forget to make sure both servers are able to get out to the Internet. In my case, when a failover occurs, the one-to-one NAT on the ASA (remember, we could free up one IP address) has to be reconfigured to NAT requests from the standby. This is still a suboptimal way of doing things, but it is better than having only a single server.
